Diffuse Materials

Now that we have objects and multiple rays per pixel, we can make some realistic looking materials. We’ll start with diffuse (matte) materials. One question is whether we mix and match geometry and materials (so we can assign a material to multiple spheres, or vice versa) or if geometry and material are tightly bound (that could be useful for procedural objects where the geometry and material are linked). We’ll go with separate — which is usual in most renderers — but do be aware of the limitation.

A Simple Diffuse Material

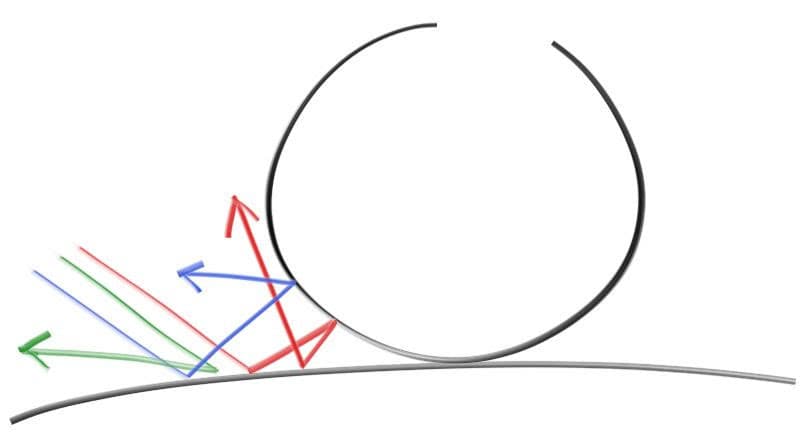

Diffuse objects that don’t emit light merely take on the color of their surroundings, but they modulate that with their own intrinsic color. Light that reflects off a diffuse surface has its direction randomized. So, if we send three rays into a crack between two diffuse surfaces they will each have different random behavior:

They also might be absorbed rather than reflected. The darker the surface, the more likely absorption is. (That’s why it is dark!) Really any algorithm that randomizes direction will produce surfaces that look matte.

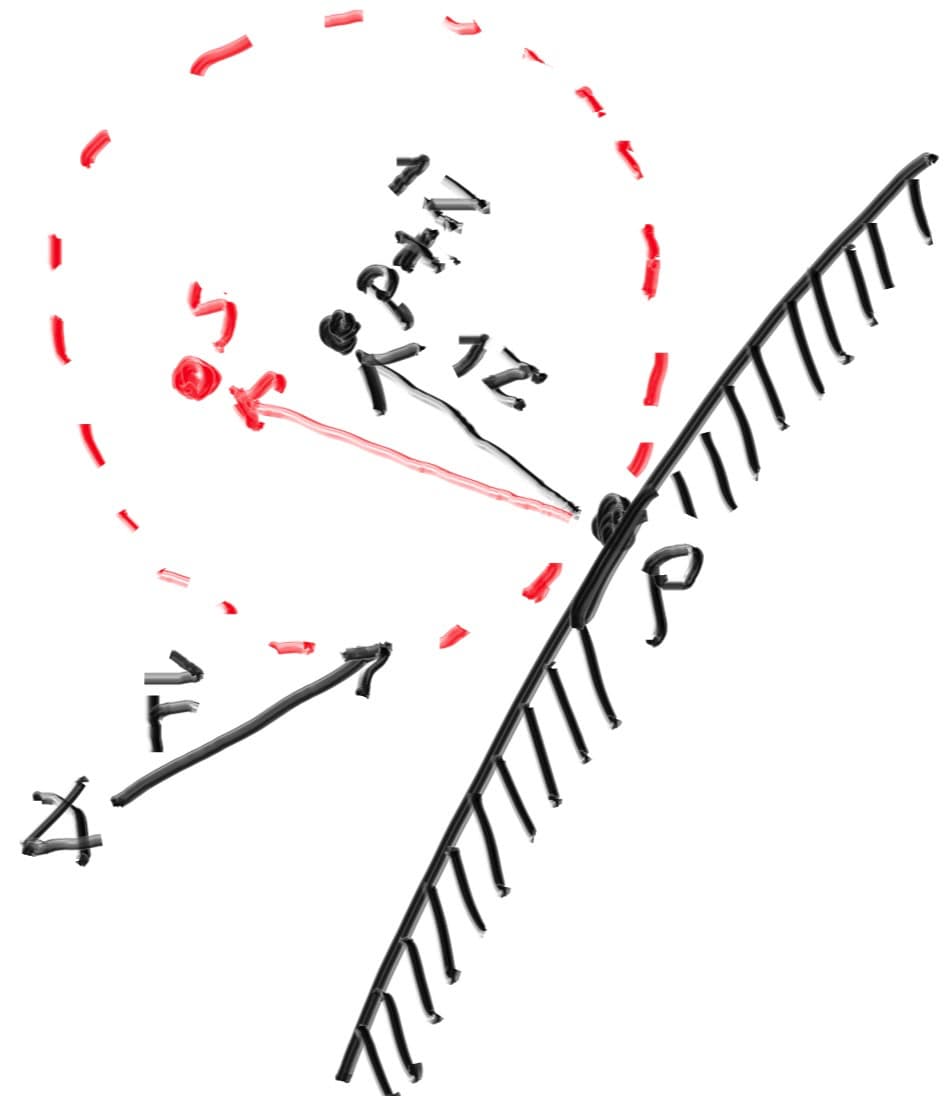

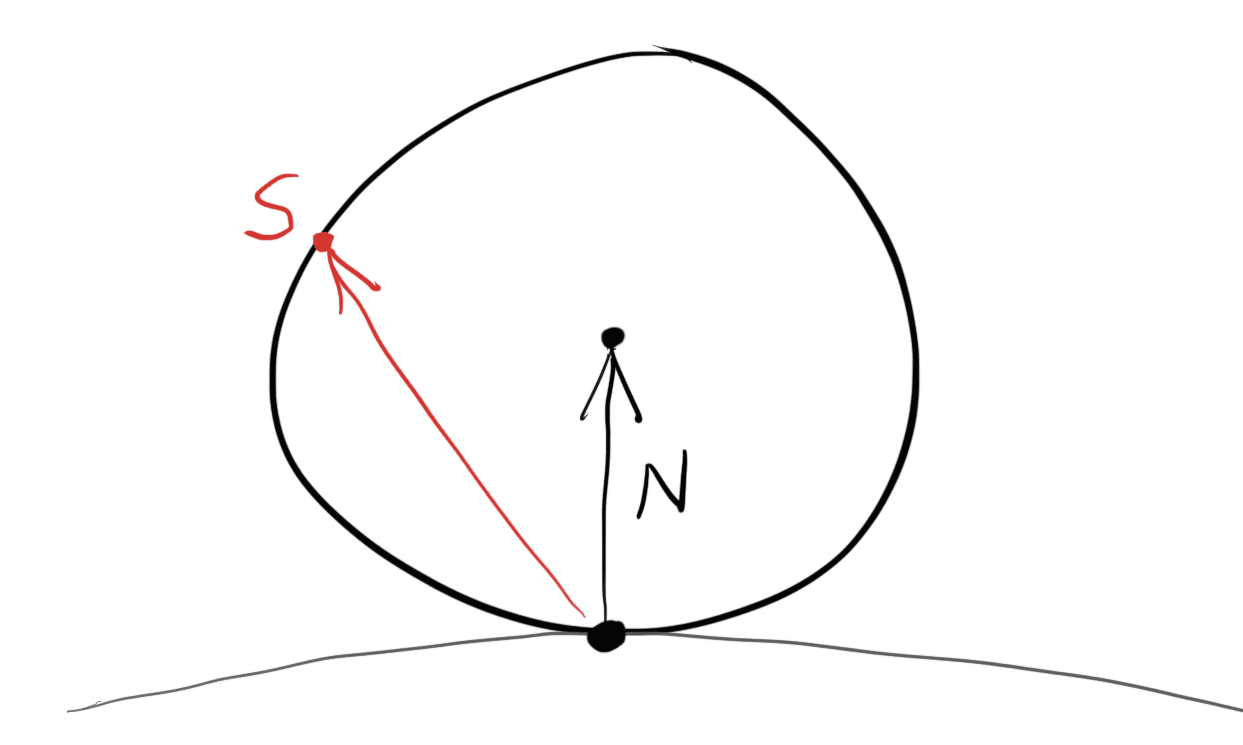

There are two unit radius spheres tangent to the hit point $\mathbf{P}$ of a surface. These two spheres have a center of $(\mathbf{P} + \mathbf{n})$ and $(\mathbf{P} - \mathbf{n})$, where $\mathbf{n}$ is the normal of the surface. The sphere with a center at $(\mathbf{P} - \mathbf{n})$ is considered inside the surface, whereas the sphere with center $(\mathbf{P} + \mathbf{n})$ is considered outside the surface.

Let’s select the tangent unit radius sphere that is on the same side of the surface as the ray origin. Pick a random point $\mathbf{S}$ inside this unit radius sphere and send a ray from the hit point $\mathbf{P}$ to the random point $\mathbf{S}$ (this is the vector $(\mathbf{S} - \mathbf{P})$):

We need a way to pick a random point in a unit radius sphere. We’ll use what is usually the easiest algorithm: a rejection method. First, pick a random point in the unit cube where x, y, and z all range from -1 to +1. Reject this point and try again if the point is outside the sphere.
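To get a feel for how much work the rejection method wastes, here is a minimal sketch that counts how many cube samples are needed per accepted point. The unit ball fills π/6 (about 52%) of the enclosing cube, so roughly half of the candidates should be rejected. The `Lcg` generator, the plain `[f64; 3]` points, and `acceptance_rate` are assumptions made only so the sketch runs without external crates; they are not part of the project code.

```rust
/// Tiny linear congruential generator, a hypothetical stand-in for
/// `common::random_double()` so this sketch needs no external crates.
struct Lcg(u64);

impl Lcg {
    /// Returns a pseudo-random value in [0, 1).
    fn next_f64(&mut self) -> f64 {
        self.0 = self
            .0
            .wrapping_mul(6364136223846793005)
            .wrapping_add(1442695040888963407);
        // Keep the top 53 bits so the value maps exactly onto an f64.
        (self.0 >> 11) as f64 / (1u64 << 53) as f64
    }

    /// Returns a pseudo-random value in [min, max).
    fn range(&mut self, min: f64, max: f64) -> f64 {
        min + (max - min) * self.next_f64()
    }
}

/// Rejection-samples a point inside the unit sphere.
/// Also returns how many cube samples were drawn to find it.
fn random_in_unit_sphere(rng: &mut Lcg) -> ([f64; 3], u32) {
    let mut tries = 0;
    loop {
        tries += 1;
        let p = [
            rng.range(-1.0, 1.0),
            rng.range(-1.0, 1.0),
            rng.range(-1.0, 1.0),
        ];
        let len_sq = p[0] * p[0] + p[1] * p[1] + p[2] * p[2];
        if len_sq < 1.0 {
            return (p, tries);
        }
    }
}

/// Fraction of cube samples that were accepted while drawing `n` points.
fn acceptance_rate(n: u32, seed: u64) -> f64 {
    let mut rng = Lcg(seed);
    let mut total_tries: u64 = 0;
    for _ in 0..n {
        let (_, tries) = random_in_unit_sphere(&mut rng);
        total_tries += tries as u64;
    }
    n as f64 / total_tries as f64
}

fn main() {
    let rate = acceptance_rate(100_000, 42);
    println!(
        "accepted fraction: {:.3} (pi/6 = {:.3})",
        rate,
        std::f64::consts::PI / 6.0
    );
}
```

On average that is a little under two candidates per accepted point, which is why the rejection loop is usually cheap enough for this kind of renderer.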

use std::fmt::{Display, Formatter, Result};

use std::ops::{Add, AddAssign, Div, DivAssign, Mul, MulAssign, Neg, Sub};

use crate::common;

#[derive(Copy, Clone, Default)]

pub struct Vec3 {

e: [f64; 3],

}

impl Vec3 {

pub fn new(x: f64, y: f64, z: f64) -> Vec3 {

Vec3 { e: [x, y, z] }

}

pub fn random() -> Vec3 {

Vec3::new(

common::random_double(),

common::random_double(),

common::random_double(),

)

}

pub fn random_range(min: f64, max: f64) -> Vec3 {

Vec3::new(

common::random_double_range(min, max),

common::random_double_range(min, max),

common::random_double_range(min, max),

)

}

pub fn x(&self) -> f64 {

self.e[0]

}

pub fn y(&self) -> f64 {

self.e[1]

}

pub fn z(&self) -> f64 {

self.e[2]

}

pub fn length(&self) -> f64 {

f64::sqrt(self.length_squared())

}

pub fn length_squared(&self) -> f64 {

self.e[0] * self.e[0] + self.e[1] * self.e[1] + self.e[2] * self.e[2]

}

}

// Type alias

pub type Point3 = Vec3;

// Output formatting

impl Display for Vec3 {

fn fmt(&self, f: &mut Formatter) -> Result {

write!(f, "{} {} {}", self.e[0], self.e[1], self.e[2])

}

}

// -Vec3

impl Neg for Vec3 {

type Output = Vec3;

fn neg(self) -> Vec3 {

Vec3::new(-self.x(), -self.y(), -self.z())

}

}

// Vec3 += Vec3

impl AddAssign for Vec3 {

fn add_assign(&mut self, v: Vec3) {

*self = *self + v;

}

}

// Vec3 *= f64

impl MulAssign<f64> for Vec3 {

fn mul_assign(&mut self, t: f64) {

*self = *self * t;

}

}

// Vec3 /= f64

impl DivAssign<f64> for Vec3 {

fn div_assign(&mut self, t: f64) {

*self = *self / t;

}

}

// Vec3 + Vec3

impl Add for Vec3 {

type Output = Vec3;

fn add(self, v: Vec3) -> Vec3 {

Vec3::new(self.x() + v.x(), self.y() + v.y(), self.z() + v.z())

}

}

// Vec3 - Vec3

impl Sub for Vec3 {

type Output = Vec3;

fn sub(self, v: Vec3) -> Vec3 {

Vec3::new(self.x() - v.x(), self.y() - v.y(), self.z() - v.z())

}

}

// Vec3 * Vec3

impl Mul for Vec3 {

type Output = Vec3;

fn mul(self, v: Vec3) -> Vec3 {

Vec3::new(self.x() * v.x(), self.y() * v.y(), self.z() * v.z())

}

}

// f64 * Vec3

impl Mul<Vec3> for f64 {

type Output = Vec3;

fn mul(self, v: Vec3) -> Vec3 {

Vec3::new(self * v.x(), self * v.y(), self * v.z())

}

}

// Vec3 * f64

impl Mul<f64> for Vec3 {

type Output = Vec3;

fn mul(self, t: f64) -> Vec3 {

Vec3::new(self.x() * t, self.y() * t, self.z() * t)

}

}

// Vec3 / f64

impl Div<f64> for Vec3 {

type Output = Vec3;

fn div(self, t: f64) -> Vec3 {

Vec3::new(self.x() / t, self.y() / t, self.z() / t)

}

}

pub fn dot(u: Vec3, v: Vec3) -> f64 {

u.e[0] * v.e[0] + u.e[1] * v.e[1] + u.e[2] * v.e[2]

}

pub fn cross(u: Vec3, v: Vec3) -> Vec3 {

Vec3::new(

u.e[1] * v.e[2] - u.e[2] * v.e[1],

u.e[2] * v.e[0] - u.e[0] * v.e[2],

u.e[0] * v.e[1] - u.e[1] * v.e[0],

)

}

pub fn unit_vector(v: Vec3) -> Vec3 {

v / v.length()

}

pub fn random_in_unit_sphere() -> Vec3 {

loop {

let p = Vec3::random_range(-1.0, 1.0);

if p.length_squared() >= 1.0 {

continue;

}

return p;

}

}

Then update the ray_color() function to use the new random direction generator:

mod camera;

mod color;

mod common;

mod hittable;

mod hittable_list;

mod ray;

mod sphere;

mod vec3;

use std::io;

use camera::Camera;

use color::Color;

use hittable::{HitRecord, Hittable};

use hittable_list::HittableList;

use ray::Ray;

use sphere::Sphere;

use vec3::Point3;

fn ray_color(r: &Ray, world: &dyn Hittable) -> Color {

let mut rec = HitRecord::new();

if world.hit(r, 0.0, common::INFINITY, &mut rec) {

let direction = rec.normal + vec3::random_in_unit_sphere();

return 0.5 * ray_color(&Ray::new(rec.p, direction), world);

}

let unit_direction = vec3::unit_vector(r.direction());

let t = 0.5 * (unit_direction.y() + 1.0);

(1.0 - t) * Color::new(1.0, 1.0, 1.0) + t * Color::new(0.5, 0.7, 1.0)

}

fn main() {

// Image

const ASPECT_RATIO: f64 = 16.0 / 9.0;

const IMAGE_WIDTH: i32 = 400;

const IMAGE_HEIGHT: i32 = (IMAGE_WIDTH as f64 / ASPECT_RATIO) as i32;

const SAMPLES_PER_PIXEL: i32 = 100;

// World

let mut world = HittableList::new();

world.add(Box::new(Sphere::new(Point3::new(0.0, 0.0, -1.0), 0.5)));

world.add(Box::new(Sphere::new(Point3::new(0.0, -100.5, -1.0), 100.0)));

// Camera

let cam = Camera::new();

// Render

print!("P3\n{} {}\n255\n", IMAGE_WIDTH, IMAGE_HEIGHT);

for j in (0..IMAGE_HEIGHT).rev() {

eprint!("\rScanlines remaining: {} ", j);

for i in 0..IMAGE_WIDTH {

let mut pixel_color = Color::new(0.0, 0.0, 0.0);

for _ in 0..SAMPLES_PER_PIXEL {

let u = (i as f64 + common::random_double()) / (IMAGE_WIDTH - 1) as f64;

let v = (j as f64 + common::random_double()) / (IMAGE_HEIGHT - 1) as f64;

let r = cam.get_ray(u, v);

pixel_color += ray_color(&r, &world);

}

color::write_color(&mut io::stdout(), pixel_color, SAMPLES_PER_PIXEL);

}

}

eprint!("\nDone.\n");

}

Limiting the Number of Child Rays

There’s one potential problem lurking here. Notice that the ray_color function is recursive. When will it stop recursing? When it fails to hit anything. In some cases, however, that may be a long time — long enough to blow the stack. To guard against that, let’s limit the maximum recursion depth, returning no light contribution at the maximum depth:

mod camera;

mod color;

mod common;

mod hittable;

mod hittable_list;

mod ray;

mod sphere;

mod vec3;

use std::io;

use camera::Camera;

use color::Color;

use hittable::{HitRecord, Hittable};

use hittable_list::HittableList;

use ray::Ray;

use sphere::Sphere;

use vec3::Point3;

fn ray_color(r: &Ray, world: &dyn Hittable, depth: i32) -> Color {

// If we've exceeded the ray bounce limit, no more light is gathered

if depth <= 0 {

return Color::new(0.0, 0.0, 0.0);

}

let mut rec = HitRecord::new();

if world.hit(r, 0.0, common::INFINITY, &mut rec) {

let direction = rec.normal + vec3::random_in_unit_sphere();

return 0.5 * ray_color(&Ray::new(rec.p, direction), world, depth - 1);

}

let unit_direction = vec3::unit_vector(r.direction());

let t = 0.5 * (unit_direction.y() + 1.0);

(1.0 - t) * Color::new(1.0, 1.0, 1.0) + t * Color::new(0.5, 0.7, 1.0)

}

fn main() {

// Image

const ASPECT_RATIO: f64 = 16.0 / 9.0;

const IMAGE_WIDTH: i32 = 400;

const IMAGE_HEIGHT: i32 = (IMAGE_WIDTH as f64 / ASPECT_RATIO) as i32;

const SAMPLES_PER_PIXEL: i32 = 100;

const MAX_DEPTH: i32 = 50;

// World

let mut world = HittableList::new();

world.add(Box::new(Sphere::new(Point3::new(0.0, 0.0, -1.0), 0.5)));

world.add(Box::new(Sphere::new(Point3::new(0.0, -100.5, -1.0), 100.0)));

// Camera

let cam = Camera::new();

// Render

print!("P3\n{} {}\n255\n", IMAGE_WIDTH, IMAGE_HEIGHT);

for j in (0..IMAGE_HEIGHT).rev() {

eprint!("\rScanlines remaining: {} ", j);

for i in 0..IMAGE_WIDTH {

let mut pixel_color = Color::new(0.0, 0.0, 0.0);

for _ in 0..SAMPLES_PER_PIXEL {

let u = (i as f64 + common::random_double()) / (IMAGE_WIDTH - 1) as f64;

let v = (j as f64 + common::random_double()) / (IMAGE_HEIGHT - 1) as f64;

let r = cam.get_ray(u, v);

pixel_color += ray_color(&r, &world, MAX_DEPTH);

}

color::write_color(&mut io::stdout(), pixel_color, SAMPLES_PER_PIXEL);

}

}

eprint!("\nDone.\n");

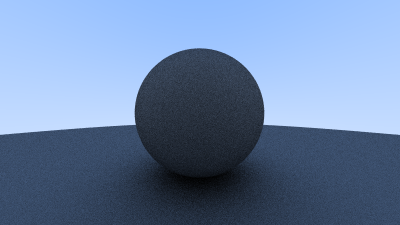

}This gives us:

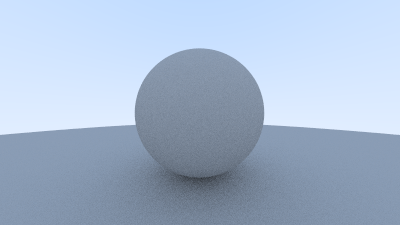
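Cutting the recursion off at the maximum depth loses very little light. As a rough sketch: each bounce in ray_color() keeps half of the incoming color, and the background gradient never exceeds 1.0 per channel, so a path that has bounced n times can contribute at most 0.5 to the power n. The helper `max_contribution` below is a hypothetical name used only for this illustration.

```rust
/// Upper bound on the light a path can still carry after `bounces`
/// diffuse bounces, when every bounce keeps the fraction `reflectance`
/// (0.5 in the ray_color() function of this chapter).
fn max_contribution(reflectance: f64, bounces: i32) -> f64 {
    reflectance.powi(bounces)
}

fn main() {
    for &bounces in [1, 5, 10, 50].iter() {
        println!(
            "{:>2} bounces -> at most {:e}",
            bounces,
            max_contribution(0.5, bounces)
        );
    }
}
```

At 50 bounces the bound is below 1e-15, far smaller than anything an 8-bit color channel can represent, so returning black there is a safe approximation for this scene.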

Using Gamma Correction for Accurate Color Intensity

Note the shadowing under the sphere. This picture is very dark, but our spheres only absorb half the energy on each bounce, so they are 50% reflectors. If you can’t see the shadow, don’t worry, we will fix that now. These spheres should look pretty light (in real life, a light grey). The picture looks dark because almost all image viewers assume that the image is “gamma corrected”, meaning the 0 to 1 values have some transform applied before being stored as a byte. There are many good reasons for that, but for our purposes we just need to be aware of it. To a first approximation, we can use “gamma 2”, which means raising the color to the power $1/\gamma$, or in our simple case $1/2$, which is just the square root:

use std::io::Write;

use crate::common;

use crate::vec3::Vec3;

// Type alias

pub type Color = Vec3;

pub fn write_color(out: &mut impl Write, pixel_color: Color, samples_per_pixel: i32) {

let mut r = pixel_color.x();

let mut g = pixel_color.y();

let mut b = pixel_color.z();

// Divide the color by the number of samples and gamma-correct for gamma=2.0

let scale = 1.0 / samples_per_pixel as f64;

r = f64::sqrt(scale * r);

g = f64::sqrt(scale * g);

b = f64::sqrt(scale * b);

// Write the translated [0, 255] value of each color component

writeln!(

out,

"{} {} {}",

(256.0 * common::clamp(r, 0.0, 0.999)) as i32,

(256.0 * common::clamp(g, 0.0, 0.999)) as i32,

(256.0 * common::clamp(b, 0.0, 0.999)) as i32,

)

.expect("writing color");

}

That yields light grey, as we desire:
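For a sense of the numbers involved, here is a minimal sketch of the same byte conversion with and without the square root. The function `to_byte` is a hypothetical helper for this illustration, and it uses the standard library's `f64::clamp` in place of the project's `common::clamp`.

```rust
/// Maps a linear intensity to a byte value in [0, 255],
/// optionally applying gamma 2 (a square root) first.
fn to_byte(linear: f64, gamma_correct: bool) -> i32 {
    let v = if gamma_correct { linear.sqrt() } else { linear };
    (256.0 * v.clamp(0.0, 0.999)) as i32
}

fn main() {
    for &linear in [0.0, 0.25, 0.5, 1.0].iter() {
        println!(
            "linear {:.2}: uncorrected {:>3}, gamma 2 {:>3}",
            linear,
            to_byte(linear, false),
            to_byte(linear, true)
        );
    }
}
```

A linear value of 0.5 is stored as 128 without correction and as 181 with it, which is why the mid-range tones look noticeably lighter after the change.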

Fixing Shadow Acne

There’s also a subtle bug in there. Some of the reflected rays hit the object they are reflecting off of not at exactly $t = 0$, but instead at $t = -0.0000001$ or $t = 0.00000001$ or whatever floating point approximation the sphere intersector gives us. So we need to ignore hits very near zero:

mod camera;

mod color;

mod common;

mod hittable;

mod hittable_list;

mod ray;

mod sphere;

mod vec3;

use std::io;

use camera::Camera;

use color::Color;

use hittable::{HitRecord, Hittable};

use hittable_list::HittableList;

use ray::Ray;

use sphere::Sphere;

use vec3::Point3;

fn ray_color(r: &Ray, world: &dyn Hittable, depth: i32) -> Color {

// If we've exceeded the ray bounce limit, no more light is gathered

if depth <= 0 {

return Color::new(0.0, 0.0, 0.0);

}

let mut rec = HitRecord::new();

if world.hit(r, 0.001, common::INFINITY, &mut rec) {

let direction = rec.normal + vec3::random_in_unit_sphere();

return 0.5 * ray_color(&Ray::new(rec.p, direction), world, depth - 1);

}

let unit_direction = vec3::unit_vector(r.direction());

let t = 0.5 * (unit_direction.y() + 1.0);

(1.0 - t) * Color::new(1.0, 1.0, 1.0) + t * Color::new(0.5, 0.7, 1.0)

}

fn main() {

// Image

const ASPECT_RATIO: f64 = 16.0 / 9.0;

const IMAGE_WIDTH: i32 = 400;

const IMAGE_HEIGHT: i32 = (IMAGE_WIDTH as f64 / ASPECT_RATIO) as i32;

const SAMPLES_PER_PIXEL: i32 = 100;

const MAX_DEPTH: i32 = 50;

// World

let mut world = HittableList::new();

world.add(Box::new(Sphere::new(Point3::new(0.0, 0.0, -1.0), 0.5)));

world.add(Box::new(Sphere::new(Point3::new(0.0, -100.5, -1.0), 100.0)));

// Camera

let cam = Camera::new();

// Render

print!("P3\n{} {}\n255\n", IMAGE_WIDTH, IMAGE_HEIGHT);

for j in (0..IMAGE_HEIGHT).rev() {

eprint!("\rScanlines remaining: {} ", j);

for i in 0..IMAGE_WIDTH {

let mut pixel_color = Color::new(0.0, 0.0, 0.0);

for _ in 0..SAMPLES_PER_PIXEL {

let u = (i as f64 + common::random_double()) / (IMAGE_WIDTH - 1) as f64;

let v = (j as f64 + common::random_double()) / (IMAGE_HEIGHT - 1) as f64;

let r = cam.get_ray(u, v);

pixel_color += ray_color(&r, &world, MAX_DEPTH);

}

color::write_color(&mut io::stdout(), pixel_color, SAMPLES_PER_PIXEL);

}

}

eprint!("\nDone.\n");

}

This gets rid of the shadow acne problem. Yes it is really called that.
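The effect can be reproduced in isolation. The sketch below, which assumes plain `[f64; 3]` arrays in place of Vec3 and uses hypothetical helper names (`sphere_roots`, `nearest_hit`, `bounce_roots`), starts a bounce ray at a hit point on the small sphere and solves the ray/sphere quadratic again. One root lands almost exactly at zero, and whether it comes out slightly positive or slightly negative depends on rounding.

```rust
type V = [f64; 3];

fn sub(a: V, b: V) -> V {
    [a[0] - b[0], a[1] - b[1], a[2] - b[2]]
}

fn dot(a: V, b: V) -> f64 {
    a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
}

fn scale(a: V, s: f64) -> V {
    [a[0] * s, a[1] * s, a[2] * s]
}

/// Both roots of the ray/sphere quadratic (smaller first), or None on a miss.
fn sphere_roots(origin: V, dir: V, center: V, radius: f64) -> Option<(f64, f64)> {
    let oc = sub(origin, center);
    let a = dot(dir, dir);
    let half_b = dot(oc, dir);
    let c = dot(oc, oc) - radius * radius;
    let disc = half_b * half_b - a * c;
    if disc < 0.0 {
        return None;
    }
    let s = disc.sqrt();
    Some(((-half_b - s) / a, (-half_b + s) / a))
}

/// First root that lies strictly inside (t_min, t_max), if any.
fn nearest_hit(roots: (f64, f64), t_min: f64, t_max: f64) -> Option<f64> {
    [roots.0, roots.1]
        .iter()
        .copied()
        .find(|&t| t > t_min && t < t_max)
}

/// Roots seen by a ray that leaves the small sphere along its outward normal.
fn bounce_roots() -> (f64, f64) {
    let center = [0.0, 0.0, -1.0];
    let radius = 0.5;
    // Camera ray from the origin that hits the sphere.
    let cam_dir = [0.3, 0.2, -1.0];
    let (t_hit, _) = sphere_roots([0.0; 3], cam_dir, center, radius)
        .expect("camera ray should hit the sphere");
    let p = scale(cam_dir, t_hit);
    // Outward unit normal at the hit point, used as the bounce direction.
    let n = scale(sub(p, center), 1.0 / radius);
    sphere_roots(p, n, center, radius).expect("bounce ray starts on the sphere")
}

fn main() {
    let roots = bounce_roots();
    println!("roots of the bounce ray: {:e} and {:e}", roots.0, roots.1);
    println!("t_min = 0.0   -> {:?}", nearest_hit(roots, 0.0, f64::INFINITY));
    println!("t_min = 0.001 -> {:?}", nearest_hit(roots, 0.001, f64::INFINITY));
}
```

With a lower bound of 0.0 the result depends on the sign of the rounding error, so some bounce rays immediately re-hit their own surface. With 0.001 the near-zero root is always ignored.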

True Lambertian Reflection

The rejection method presented here produces random points in the unit ball offset along the surface normal. This corresponds to picking directions on the hemisphere with high probability close to the normal, and a lower probability of scattering rays at grazing angles. This distribution scales by $\cos^3(\phi)$, where $\phi$ is the angle from the normal. This is useful since light arriving at shallow angles spreads over a larger area, and thus has a lower contribution to the final color.

However, we are interested in a Lambertian distribution, which has a distribution of $\cos(\phi)$. True Lambertian reflection still scatters rays with higher probability close to the normal, but the distribution is more uniform. This is achieved by picking random points on the surface of the unit sphere, offset along the surface normal. Picking random points on the unit sphere can be achieved by picking random points in the unit sphere, and then normalizing those.

use std::fmt::{Display, Formatter, Result};

use std::ops::{Add, AddAssign, Div, DivAssign, Mul, MulAssign, Neg, Sub};

use crate::common;

#[derive(Copy, Clone, Default)]

pub struct Vec3 {

e: [f64; 3],

}

impl Vec3 {

pub fn new(x: f64, y: f64, z: f64) -> Vec3 {

Vec3 { e: [x, y, z] }

}

pub fn random() -> Vec3 {

Vec3::new(

common::random_double(),

common::random_double(),

common::random_double(),

)

}

pub fn random_range(min: f64, max: f64) -> Vec3 {

Vec3::new(

common::random_double_range(min, max),

common::random_double_range(min, max),

common::random_double_range(min, max),

)

}

pub fn x(&self) -> f64 {

self.e[0]

}

pub fn y(&self) -> f64 {

self.e[1]

}

pub fn z(&self) -> f64 {

self.e[2]

}

pub fn length(&self) -> f64 {

f64::sqrt(self.length_squared())

}

pub fn length_squared(&self) -> f64 {

self.e[0] * self.e[0] + self.e[1] * self.e[1] + self.e[2] * self.e[2]

}

}

// Type alias

pub type Point3 = Vec3;

// Output formatting

impl Display for Vec3 {

fn fmt(&self, f: &mut Formatter) -> Result {

write!(f, "{} {} {}", self.e[0], self.e[1], self.e[2])

}

}

// -Vec3

impl Neg for Vec3 {

type Output = Vec3;

fn neg(self) -> Vec3 {

Vec3::new(-self.x(), -self.y(), -self.z())

}

}

// Vec3 += Vec3

impl AddAssign for Vec3 {

fn add_assign(&mut self, v: Vec3) {

*self = *self + v;

}

}

// Vec3 *= f64

impl MulAssign<f64> for Vec3 {

fn mul_assign(&mut self, t: f64) {

*self = *self * t;

}

}

// Vec3 /= f64

impl DivAssign<f64> for Vec3 {

fn div_assign(&mut self, t: f64) {

*self = *self / t;

}

}

// Vec3 + Vec3

impl Add for Vec3 {

type Output = Vec3;

fn add(self, v: Vec3) -> Vec3 {

Vec3::new(self.x() + v.x(), self.y() + v.y(), self.z() + v.z())

}

}

// Vec3 - Vec3

impl Sub for Vec3 {

type Output = Vec3;

fn sub(self, v: Vec3) -> Vec3 {

Vec3::new(self.x() - v.x(), self.y() - v.y(), self.z() - v.z())

}

}

// Vec3 * Vec3

impl Mul for Vec3 {

type Output = Vec3;

fn mul(self, v: Vec3) -> Vec3 {

Vec3::new(self.x() * v.x(), self.y() * v.y(), self.z() * v.z())

}

}

// f64 * Vec3

impl Mul<Vec3> for f64 {

type Output = Vec3;

fn mul(self, v: Vec3) -> Vec3 {

Vec3::new(self * v.x(), self * v.y(), self * v.z())

}

}

// Vec3 * f64

impl Mul<f64> for Vec3 {

type Output = Vec3;

fn mul(self, t: f64) -> Vec3 {

Vec3::new(self.x() * t, self.y() * t, self.z() * t)

}

}

// Vec3 / f64

impl Div<f64> for Vec3 {

type Output = Vec3;

fn div(self, t: f64) -> Vec3 {

Vec3::new(self.x() / t, self.y() / t, self.z() / t)

}

}

pub fn dot(u: Vec3, v: Vec3) -> f64 {

u.e[0] * v.e[0] + u.e[1] * v.e[1] + u.e[2] * v.e[2]

}

pub fn cross(u: Vec3, v: Vec3) -> Vec3 {

Vec3::new(

u.e[1] * v.e[2] - u.e[2] * v.e[1],

u.e[2] * v.e[0] - u.e[0] * v.e[2],

u.e[0] * v.e[1] - u.e[1] * v.e[0],

)

}

pub fn unit_vector(v: Vec3) -> Vec3 {

v / v.length()

}

pub fn random_in_unit_sphere() -> Vec3 {

loop {

let p = Vec3::random_range(-1.0, 1.0);

if p.length_squared() >= 1.0 {

continue;

}

return p;

}

}

pub fn random_unit_vector() -> Vec3 {

unit_vector(random_in_unit_sphere())

}

This random_unit_vector() is a drop-in replacement for the existing random_in_unit_sphere() function.

mod camera;

mod color;

mod common;

mod hittable;

mod hittable_list;

mod ray;

mod sphere;

mod vec3;

use std::io;

use camera::Camera;

use color::Color;

use hittable::{HitRecord, Hittable};

use hittable_list::HittableList;

use ray::Ray;

use sphere::Sphere;

use vec3::Point3;

fn ray_color(r: &Ray, world: &dyn Hittable, depth: i32) -> Color {

// If we've exceeded the ray bounce limit, no more light is gathered

if depth <= 0 {

return Color::new(0.0, 0.0, 0.0);

}

let mut rec = HitRecord::new();

if world.hit(r, 0.001, common::INFINITY, &mut rec) {

let direction = rec.normal + vec3::random_unit_vector();

return 0.5 * ray_color(&Ray::new(rec.p, direction), world, depth - 1);

}

let unit_direction = vec3::unit_vector(r.direction());

let t = 0.5 * (unit_direction.y() + 1.0);

(1.0 - t) * Color::new(1.0, 1.0, 1.0) + t * Color::new(0.5, 0.7, 1.0)

}

fn main() {

// Image

const ASPECT_RATIO: f64 = 16.0 / 9.0;

const IMAGE_WIDTH: i32 = 400;

const IMAGE_HEIGHT: i32 = (IMAGE_WIDTH as f64 / ASPECT_RATIO) as i32;

const SAMPLES_PER_PIXEL: i32 = 100;

const MAX_DEPTH: i32 = 50;

// World

let mut world = HittableList::new();

world.add(Box::new(Sphere::new(Point3::new(0.0, 0.0, -1.0), 0.5)));

world.add(Box::new(Sphere::new(Point3::new(0.0, -100.5, -1.0), 100.0)));

// Camera

let cam = Camera::new();

// Render

print!("P3\n{} {}\n255\n", IMAGE_WIDTH, IMAGE_HEIGHT);

for j in (0..IMAGE_HEIGHT).rev() {

eprint!("\rScanlines remaining: {} ", j);

for i in 0..IMAGE_WIDTH {

let mut pixel_color = Color::new(0.0, 0.0, 0.0);

for _ in 0..SAMPLES_PER_PIXEL {

let u = (i as f64 + common::random_double()) / (IMAGE_WIDTH - 1) as f64;

let v = (j as f64 + common::random_double()) / (IMAGE_HEIGHT - 1) as f64;

let r = cam.get_ray(u, v);

pixel_color += ray_color(&r, &world, MAX_DEPTH);

}

color::write_color(&mut io::stdout(), pixel_color, SAMPLES_PER_PIXEL);

}

}

eprint!("\nDone.\n");

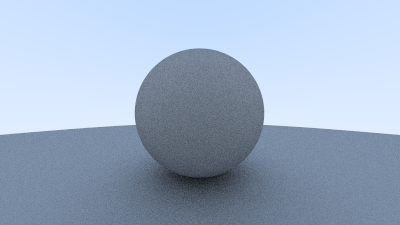

}After rendering we get a similar image:

It’s hard to tell the difference between these two diffuse methods, given that our scene of two spheres is so simple, but you should be able to notice two important visual differences:

- The shadows are less pronounced after the change
- Both spheres are lighter in appearance after the change

Both of these changes are due to the more uniform scattering of the light rays: fewer rays scatter toward the normal. This means that diffuse objects will appear lighter because more light bounces toward the camera. For the shadows, less light bounces straight up, so the parts of the larger sphere directly underneath the smaller sphere are brighter.
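The difference between the two scattering methods can also be measured rather than eyeballed. The sketch below estimates the average cosine of the angle between the scattered direction and the normal for both methods. For a true cosine-weighted (Lambertian) distribution that average is 2/3, and the unit-ball method should come out higher because it favors directions near the normal. The `Lcg` generator, the `[f64; 3]` vectors, the fixed normal (0, 0, 1), and the function `mean_cosine` are assumptions made for this illustration only.

```rust
/// Tiny linear congruential generator, a hypothetical stand-in for
/// `common::random_double()` so this sketch needs no external crates.
struct Lcg(u64);

impl Lcg {
    /// Returns a pseudo-random value in [0, 1).
    fn next_f64(&mut self) -> f64 {
        self.0 = self
            .0
            .wrapping_mul(6364136223846793005)
            .wrapping_add(1442695040888963407);
        (self.0 >> 11) as f64 / (1u64 << 53) as f64
    }

    /// Returns a pseudo-random value in [min, max).
    fn range(&mut self, min: f64, max: f64) -> f64 {
        min + (max - min) * self.next_f64()
    }
}

fn length(v: [f64; 3]) -> f64 {
    (v[0] * v[0] + v[1] * v[1] + v[2] * v[2]).sqrt()
}

/// Random point inside the unit sphere, by rejection from the cube.
fn random_in_unit_sphere(rng: &mut Lcg) -> [f64; 3] {
    loop {
        let p = [
            rng.range(-1.0, 1.0),
            rng.range(-1.0, 1.0),
            rng.range(-1.0, 1.0),
        ];
        if length(p) < 1.0 {
            return p;
        }
    }
}

/// Random point on the unit sphere: normalize a point from inside it.
/// The length guard is an addition of this sketch to avoid dividing by ~0.
fn random_unit_vector(rng: &mut Lcg) -> [f64; 3] {
    loop {
        let p = random_in_unit_sphere(rng);
        let len = length(p);
        if len > 1e-9 {
            return [p[0] / len, p[1] / len, p[2] / len];
        }
    }
}

/// Average cosine between the normal (0, 0, 1) and `normal + offset`.
fn mean_cosine(samples: u32, seed: u64, on_surface: bool) -> f64 {
    let mut rng = Lcg(seed);
    let mut sum = 0.0;
    let mut count = 0u32;
    for _ in 0..samples {
        let o = if on_surface {
            random_unit_vector(&mut rng)
        } else {
            random_in_unit_sphere(&mut rng)
        };
        let d = [o[0], o[1], o[2] + 1.0];
        let len = length(d);
        if len < 1e-9 {
            continue; // Degenerate direction, skip it.
        }
        sum += d[2] / len;
        count += 1;
    }
    sum / count as f64
}

fn main() {
    println!("unit ball offset:   {:.3}", mean_cosine(200_000, 1, false));
    println!("unit sphere offset: {:.3}", mean_cosine(200_000, 1, true));
}
```

A lower average cosine means more rays leave at wider angles, which matches the lighter spheres and softer shadows described above.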